AI-Generated Characters And Cultural Appropriation Online

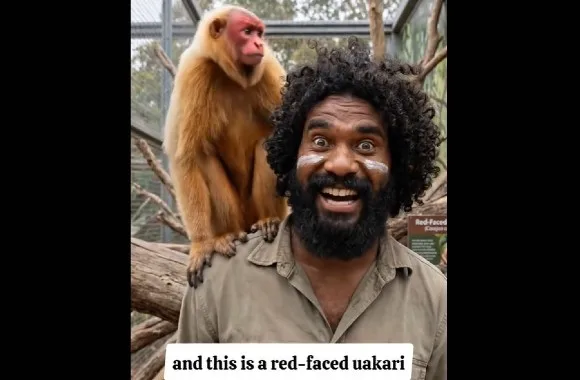

A scholar at Macquarie University recently flagged a worrying trend and described it as ‘a new type of cultural appropriation,’ pointing to a viral AI-driven TikTok presence. The channel known as “Bush Legend” uses an algorithmically created host labeled an “Aboriginal man” who narrates wildlife tales. In some clips this synthetic figure is “sometimes painted up…” which raises immediate questions about who is being represented and who is profiting.

On the surface the content looks harmless: cute animals, short facts, quick narratives designed for fast scrolling. Underneath, there is a replication of cultural markers and a packaging of identity into a consumable format that was not authorized by the communities depicted. That gap between mimicry and meaningful representation is where harm accumulates.

AI can mimic accents, facial features, and styles so convincingly that the human context disappears, leaving only a simulacrum. When cultural signifiers are recreated without context, they risk entrenching stereotypes rather than educating. That makes this kind of synthetic impersonation qualitatively different from typical remix culture or the cases at the center of earlier appropriation debates.

Why This Feels Different

The scale and speed of platforms matter because an algorithm can amplify a synthetic persona to millions before questions are asked. A generated character can be tweaked to suit engagement metrics, encouraging designers to favor what performs rather than what respects. That dynamic privileges clicks over community consent and flattens complexity into caricature.

There is also a power imbalance: corporations and creators with access to models decide which identities get simulated and how. Indigenous peoples and other marginalized groups rarely get a seat at that design table, yet they bear the cultural and emotional costs. The result is not only misrepresentation but also economic and symbolic exploitation.

Another factor is plausibility. Viewers may not realize a host is synthetic or that cultural cues have been algorithmically stitched together from many sources. That misunderstanding can spread misinformation about traditions, language, and history while making it harder for genuine voices to be heard. In short, simulated authenticity can drown out lived authenticity.

A Way Forward

First, recognition matters: calling the problem out clearly helps creators and platforms see the ethical contours before harm becomes entrenched. Second, meaningful engagement with affected communities is essential so that representation is not a hollow simulation but a collaborative storytelling choice. Third, transparency about synthetic creation helps audiences know when they are watching generated content versus a person from a community.

There are practical tensions here because technology moves faster than cultural norms, and platforms are driven by engagement economics. Still, insisting on basic standards of consent, context, and collaboration is not an impossible ask. When communities shape how they are portrayed, the result is more respectful content and better cultural literacy for audiences.

We should not pretend that every AI-created character is automatically harmful, but no one should dismiss the very real risks either. Sensitivity, accountability, and a willingness to listen to those directly affected can turn a troubling trend into an opportunity for more honest and useful storytelling. The conversation matters because how we represent people online shapes how we treat them in the real world.

More information from Gemini 3 AI

- Data Colonialism: AI systems are accused of perpetuating “data colonialism,” where tech companies and users exploit, process, and monetize Indigenous knowledge, language, and cultural data without Free, Prior, and Informed Consent (FPIC).

- Misrepresentation and Stereotypes: AI frequently generates inaccurate, stereotypical, or profane depictions of Indigenous peoples. For example, AI-generated images have wrongly depicted Aboriginal Australians with sacred, private tattoos, reducing deep cultural significance to generic, marketable tropes.

- Identity Theft: The rise of AI-generated personas, such as a fake “Aboriginal Steve Irwin,” highlights the risk of outsiders using AI to impersonate and misrepresent Indigenous identities.

- Misuse of Language: Tools like OpenAI’s Whisper have been trained on Indigenous languages, such as Māori, without permission, raising fears that these languages could be distorted or stripped of their cultural context.

- Environmental Impact: AI data centers, often located near Indigenous lands, exacerbate environmental degradation, threatening the ecosystems these communities depend on.

Some Christian perspectives focus on the broader societal risks of AI, while many others raise ethical and spiritual concerns about its more immediate dangers, particularly how it mimics, replaces, or trivializes authentic human experience and sacred traditions:

- Warnings of Idolatry and Misinformation: Pastors and Christian leaders warn that using AI for spiritual guidance—such as chatbots imitating Jesus—leads to “digital messiahs,” idolatry, and a “shallowness in formation” where users gain knowledge without spiritual depth.

- Immediate Harm and Deception: Concerns are raised about “bad actors” using AI to create deepfakes and spread misinformation, exploiting vulnerable people who lack access to traditional pastoral care.

- Loss of Human Connection: Critics argue that AI-generated spiritual content (e.g., worship music, prayers) mimics the “posture of grace” without bearing its cost, replacing the necessary, real-life “friction” of faith with superficial, immediate gratification.

- Calls for Resistance: Some faith-based groups are actively resisting AI’s unbridled expansion through efforts such as the “Minus AI” campaign, which encourages users to restrict AI from scraping their data and to demand the right to opt out.

- Concerns on “AI Resurrections”: Catholic experts have raised ethical questions about using AI to recreate deceased loved ones, arguing it can disrupt the natural, sacred process of grieving.